Response Time Evaluation

Response (retrieval) time evaluation measures how long it takes to carry out tasks such as navigating or browsing links and searching for or obtaining resources. It is calculated as the average time a digital library takes to process all requests:

$$\text{Average response time} = \frac{\sum_{i=1}^{n} st_i}{n}$$

where $st_i$ is the time a digital library takes to process task $i$, and $n$ is the total number of tasks.

Equation 1: Average Response Time

Methodology of Response / Retrieval Time Evaluation

In detail, the response/retrieval time evaluation uses two criteria: the time it takes to access all links included in the home page (‘link response time’), and the time it takes a search engine to show the results for a query (‘search response time’).

Link Response Time

Link response time measures how long it takes to access each linked website on the home page of a digital library, that is, the access and response times of all links on that home page. The average link response time is then calculated for each candidate digital library:

$$\text{Average link response time} = \frac{\sum_{i=1}^{n} st_i}{n}$$

where $st_i$ is the time a digital library takes to access and respond to link $i$ on its home page, and $n$ is the number of links on the home page.

Equation 2: Average Link Response Time

Search Response Time

Search response time measures how long a digital library's search engine takes to respond to a query and retrieve the results, that is, how long it takes to load the search results after a query is entered into the search engine. Since Zhang and Si point out that search response time can differ across types of queries (Zhang & Si, 2009), a program was designed to choose different queries for each digital library from the words on its home page. It filters these words with a standard stoplist (e.g., symbols, prepositions, and other uninformative words), so each digital library has different queries depending on its subject domain and content. Search response time is measured for all queries (all home-page words not in the stoplist) of each digital library, and the average search response time is then calculated for each candidate digital library with the equation below.

$$\text{Average search response time} = \frac{\sum_{i=1}^{n} st_i}{n}$$

where $st_i$ is the time a digital library takes to load the search results for query $i$ (a word from its home page), and $n$ is the number of queries drawn from the home page.

Equation 3: Average Search Response Time

Outline of the Response Time Evaluation Methodology

Using the link and search response time criteria, the response time evaluation is outlined as follows:

- First, the average link response time of each digital library is calculated by Equation 2 from the access times of all links found in the source code of its home page.

- Next, the average search response time is calculated by Equation 3 for each of the sixty-two digital libraries. Queries are created from all words in the title (<title>) and paragraph (<p>) elements in the source code of each home page, after filtering out common stop words (e.g., symbols, prepositions, and other uninformative words).

- Lastly, the average link response time and the average search response time are averaged into one value per digital library, since the response (retrieval) time evaluation needs a single value for each library:

$$\text{Average response time} = \frac{\text{average link response time} + \text{average search response time}}{2}$$

Equation 4: Average Response Time for the Response Time Evaluation
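Equations 1 to 4 share one pattern: each per-criterion value is a plain arithmetic mean of measured times, and Equation 4 averages the two criterion means. The minimal Python sketch below shows that relationship; the function names `average` and `overall_response_time` are hypothetical, and times are assumed to be already measured in seconds.

```python
def average(times):
    """Equations 1-3: sum of the per-task times st_i divided by n."""
    if not times:
        raise ValueError("at least one measured time is required")
    return sum(times) / len(times)


def overall_response_time(link_times, search_times):
    """Equation 4: mean of the average link and average search response times."""
    return (average(link_times) + average(search_times)) / 2
```

For example, `overall_response_time([0.2, 0.4], [0.6])` averages 0.3 and 0.6 to give about 0.45 seconds. The source does not say how Equation 4 is applied to libraries that have only a link response time, so this sketch raises `ValueError` for an empty list rather than guessing.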

Detailed Procedure with the Designed Computer Program

A program was written in the Python programming language to implement the response time evaluation methodology. The program measures link response time and search response time automatically.

To measure link response time, the program performs the following steps:

- First, the program finds all links in the source code of each digital library’s home page.

- Next, it measures how long it takes to access each linked website.

- Lastly, the response times of all links are summed, and the total is divided by the number of links to obtain the average for each digital library.
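The link-timing steps above can be sketched with the Python standard library alone. This is a minimal sketch, not the original program: it assumes that "links" means the `href` values of `<a>` tags, times a full download of each target with `urllib.request.urlopen`, and skips links that fail to load, so the divisor is the number of links actually reached rather than every link on the page. The names `extract_links`, `fetch`, and `average_link_response_time` are hypothetical.

```python
import time
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse
from urllib.request import urlopen


class LinkExtractor(HTMLParser):
    """Collect absolute http(s) URLs from the href attributes of <a> tags."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if not href or href.startswith("#"):
            return  # skip empty hrefs and same-page anchors
        url = urljoin(self.base_url, href)  # resolve relative links
        if urlparse(url).scheme in ("http", "https"):
            self.links.append(url)  # mailto:, javascript:, etc. are dropped


def extract_links(html, base_url):
    parser = LinkExtractor(base_url)
    parser.feed(html)
    parser.close()
    return parser.links


def fetch(url, timeout=30):
    """Default fetcher: download the full response body of one link."""
    with urlopen(url, timeout=timeout) as response:
        response.read()


def average_link_response_time(html, base_url, fetcher=fetch):
    """Equation 2: total access time of the links divided by their number.

    Assumption: links whose fetch raises OSError (URLError, HTTPError,
    timeouts) are skipped, so n counts only the links actually reached.
    """
    times = []
    for url in extract_links(html, base_url):
        start = time.perf_counter()
        try:
            fetcher(url)
        except OSError:
            continue
        times.append(time.perf_counter() - start)
    return sum(times) / len(times) if times else None
```

Passing the fetcher as a parameter keeps the timing logic separate from the network code, so a different HTTP client (for example, one that reads only the response headers) could be swapped in without changing how the average is computed.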

To measure search response time, the program performs the following steps:

- First, the program builds queries for the search engine from the words in the <title> and <p> elements of the source code of each digital library’s home page. Each word is checked against a common stoplist of prepositions, signs, and meaningless words; words in the stoplist are excluded, and all other words are saved into the query dictionary as queries.

- Next, each query in the query dictionary is entered into the search engine of each digital library. To do this automatically, the search engines of the digital libraries were first investigated to learn how each one is implemented and how queries from the query dictionary could be submitted to it automatically. The search part of the program was designed based on this investigation.

- Then, the time it takes to load the search results is measured for every query.

- Finally, the average search response time is calculated.
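The query-building and search-timing steps can be sketched as follows. Several parts are assumptions rather than details from the source: the short `STOPLIST`, the removal of duplicate words, the hypothetical function names, and the `search_url_template` parameter (a URL with a `{query}` placeholder, such as a hypothetical `https://dl.example.org/search?q={query}`). In the original study each library's search interface was investigated individually, and many search engines will not accept a simple GET query like this.

```python
import re
import time
from html.parser import HTMLParser
from urllib.parse import quote_plus
from urllib.request import urlopen

# Hypothetical, abbreviated stoplist; the study's actual stoplist is not given.
STOPLIST = {"a", "an", "and", "at", "by", "for", "from", "in", "is", "of",
            "on", "or", "the", "to", "with"}


class TitleParagraphText(HTMLParser):
    """Collect the text inside <title> and <p> elements (assumes they are closed)."""

    def __init__(self):
        super().__init__()
        self.depth = 0  # greater than 0 while inside <title> or <p>
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("title", "p"):
            self.depth += 1

    def handle_endtag(self, tag):
        if tag in ("title", "p") and self.depth > 0:
            self.depth -= 1

    def handle_data(self, data):
        if self.depth > 0:
            self.chunks.append(data)


def build_query_dictionary(html):
    """Return stoplist-filtered words from <title> and <p>, without duplicates."""
    parser = TitleParagraphText()
    parser.feed(html)
    parser.close()
    text = " ".join(parser.chunks).lower()
    # [^\W\d_]+ matches runs of letters only, dropping digits, signs and symbols.
    words = re.findall(r"[^\W\d_]+", text)
    # dict.fromkeys removes duplicates while keeping first-seen order.
    return list(dict.fromkeys(w for w in words if w not in STOPLIST))


def fetch(url, timeout=30):
    """Default fetcher: download the full search results page."""
    with urlopen(url, timeout=timeout) as response:
        response.read()


def average_search_response_time(queries, search_url_template, fetcher=fetch):
    """Equation 3: total time to load results divided by the number of queries."""
    times = []
    for query in queries:
        url = search_url_template.format(query=quote_plus(query))
        start = time.perf_counter()
        try:
            fetcher(url)
        except OSError:
            continue  # assumption: queries whose page fails to load are skipped
        times.append(time.perf_counter() - start)
    return sum(times) / len(times) if times else None
```

Matching words with a letters-only pattern removes numbers and punctuation before the stoplist is applied, so the stoplist itself only has to cover common function words.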

Next, the overall average response time of each digital library is calculated from its average link response time and its average search response time (Equation 4). Finally, this average is scored on a 5-point scale, as in the other evaluations.

Prerequisite

Before the response time experiment, the possible impact of each digital library’s location was carefully considered. A digital library located near Champaign and Urbana, where the experiment was run, would be expected to respond faster than distant ones. To reduce this location effect, the experiment was run several times on a server of the Graduate School of Library and Information Science rather than on personal laptops or desktops. In addition, to account for variation in online conditions over time, the experiment was repeated at various times.
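Repeating the experiment at different times can be expressed as a small wrapper around any measurement function, such as one that returns a library's average link or search response time. In this sketch, the name `repeated_measurement`, the default of three runs, and the one-hour pause are hypothetical choices; the source states only that the experiment was run several times at various times.

```python
import statistics
import time


def repeated_measurement(measure, runs=3, pause_seconds=3600.0):
    """Run a measurement several times, pausing between runs, and average the results.

    `measure` is any zero-argument callable returning an average response time
    in seconds, or None if nothing could be measured in that run. The run count
    and pause length are hypothetical defaults.
    """
    results = []
    for run in range(runs):
        if run > 0:
            time.sleep(pause_seconds)  # spread the runs out over time
        value = measure()
        if value is not None:
            results.append(value)
    return statistics.mean(results) if results else None
```

Runs that return `None` are left out of the mean, so a temporary outage during one run does not pull the final value toward zero.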

Result of the Response Time Evaluation

Overall, about 74% of the sixty-two candidate digital libraries were evaluated by both the link and the search response time criteria. About 18% showed only a link response time, because the program could not access their search engines or could not enter queries into them. About 5% could not be evaluated by the program, because robots.txt disallows automated access or for other reasons. The Digital Past digital library could not be evaluated at all because of broken links, and the SMETE digital library, the Database of Recorded American Music, and the Analytical Sciences Digital Library (ASDL) did not return any response times.

The shortest average response times were as follows:

- Southeast Asia Digital Library (SADL): 0.0235 seconds, the shortest combined link and search response time.

- Chinese Philosophical Etext Archive: 0.1189 seconds.

- NASA’s Visible Earth: 0.1227 seconds.

- Military History and Military Science of the Library of Congress: 0.2290 seconds.

- U.S. Department of Health & Human Services (HHS.gov): 0.2383 seconds, and so on.

NASA’s Visible Earth and the Library of Congress military history collection, both run by the U.S. government, are among the fastest digital libraries.

Analysis of the Response Time Evaluation

Before the response time experiment, the possible impact of location and time zone was carefully considered. To reduce this impact, the experiment was run several times on the server of the Graduate School of Library and Information Science.

However, the results differ somewhat from what we expected. The Southeast Asia Digital Library (SADL) and the Chinese Philosophical Etext Archive show the shortest response times even though they are far from Champaign, Illinois. This suggests that the physical distance of a digital library has little effect on its online response time.

Analysis based on Scores of Each Digital library

Overall, the response times of the sixty-two candidate digital libraries are very short, which suggests that most candidate digital libraries provide good response time. Three digital libraries (Southeast Asia Digital Library (SADL), Chinese Philosophical Etext Archive, and NASA’s Visible Earth) respond in about 0.1 second or less, which Nielsen describes as the limit for having “the user feel that the system is reacting instantaneously.” Most digital libraries respond in more than 0.1 but less than 1.0 second, a range in which “the user does lose the feeling of operating directly on the data.” Only one digital library shows a response time of over 10 seconds, which “is about the limit for keeping the user’s attention focused on the dialogue” (Nielsen, J., Response Times: The 3 Important Limits, 1993).

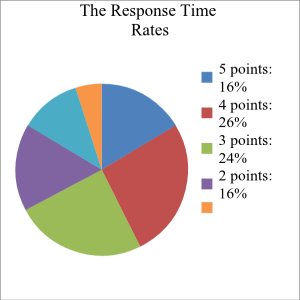

As Figure 1 shows, 24% of the sixty-two digital libraries score 3 out of 5 points (a score of 3 means an average response time from 0.6 to less than 0.9 second). In total, 66% of the digital libraries have an average response time of less than 0.9 second.

Analysis based on Subject Domains

Digital libraries in the Technology and Language subject areas show the fastest response times, followed by those in the Military Science and Geography domains. This may be because technology, military science, and geography are subjects closely associated with fast response.

*More details are in the paper, Chapter VI. Performance Evaluation, Chapter 1. Response/Retrieval Time Evaluation. This website and the paper were developed by the same person.